
Importance of transients in nature and in stereophony.

Now that the spate of new multi-channel stereophonic reproducing systems has decreased to a trickle, it seems timely to look again at the physical and psychological bases that govern directional hearing to discover why nothing has so far emerged to displace the familiar two-channel arrangement hitherto widely accepted. This article emphasises the time difference cue to direction and the role of transients as 'time tags' both in natural listening and in stereophony. Some experimental evidence is put forward in support of the conclusions which largely explains the failure of quadraphonic and other systems to make good their claims to 'all-round' localization and total realism.

Over the last ten years or so multichannel stereophonic systems have proliferated. The proponents of most of these systems have so far largely failed to spell out the objectives that they are pursuing, still less the means by which they hope to succeed. Not all of the systems aim at increased naturalness of reproduction; the so-called 'surround' category in particular was designed only to produce a (hopefully) pleasing effect. Mostly, however, the implication is that they are trying to recreate the natural listening situation to a greater extent than is possible with the now conventional two-channel arrangement. Efforts have met with varying degrees of success but none can claim to have said the last word on this complicated subject.

One cause of failure is surely the lack of awareness of the auditory cues that have to be provided if nature is to be effectively imitated. This article summarizes the generally accepted facts concerning directional hearing of which architects of stereophonic systems must take note if they hope to achieve realism.

The Pioneers

Tribute is due to the illustrious workers who have contributed so painstakingly and rigorously over a very long period to piece together the now considerable body of knowledge that exists concerning the ear and hearing. The full list of those who have traced the chain, from the pinna which receives the pressure variations that constitute an acoustic stimulus, through the transducing members comprising the eardrum, stapes, cochlea, basilar membrane and hair cells, the action potentials they generate, their coding and mode of transmission along the fibres of the eighth nerve and its pathways and interconnections, to the higher centres of the brain where the semantic and other significant information is extracted and utilized, would be long enough in itself to fill an article. A short list might include Helmholtz, Rayleigh, Stewart, Banister, Firestone, Stevens, Newman, von Bekesy, Fletcher, Snow, Wallach, Rosenzweig, de Boer, van Urk, Galambos, Davis, Jeffress, Wiener, Hirsch, Cherry, van Bergeijk and Deatherage, omitting many more, particularly of the most recent workers. Anyone who cares to follow the unfolding story through the medium of their published papers will find it a fascinating, if as yet incomplete, one.

Before examining hearing in detail it is interesting to compare the sense of hearing with that of sight. Hearing, like sight, must have evolved primarily as a survival mechanism, the reaction to a sudden stimulus automatically posing the questions: 'What is it?' and 'Where is it?' In daytime the eye has a great advantage in that the geometrical laws of optics do a great deal of the work of locating and recognizing remote objects, a process employing large numbers of parallel channels which respond relatively slowly (fortunately for the cinema and TV). In the dark the ears become the first line of defence and by contrast provide only two channels, albeit capable of much more rapid response, and more processing is needed to extract meaningful information. From a complex sound stimulus the ears and brain together have to recognize which components cohere into groups identifying specific sources (the 'gestalten' of Cherry [★] 1. E C Cherry & B McA Sayers, Mechanism of binaural fusion in the hearing of speech. JASA vol. 29 (1957) p. 973.), their pitch and timbre, in which directions these lie, a rough idea of their distance and perhaps, from their reflections, something of the unseen environment. The process is essentially time-oriented, the response of the auditory complex being much more rapid than that of the visual. Fewer than 100 discrete samples per second give an impression of visual continuity; to do the same aurally requires upwards of 20,000. At its most acute the auditory complex can detect time differences of a few tens of microseconds.

Physical basis of localization

The simplest example of natural listening must be that of an observer exposed to sound from a single source a few metres distant, so that conditions in his vicinity approximate to plane wave propagation. If the direction of the source relative to the listener is arbitrary, i.e. it lies neither in the horizontal plane passing through his head nor in the median plane dividing his sphere of observation into left and right halves, the sounds reaching his eardrums will differ in time of arrival and will be modified spectrally by the effect of his own head as an obstacle in the sound field and by their passage through the convolutions of his pinnae. The spectral modifications depend on both frequency and direction of arrival and become increasingly large and complicated at high frequencies. The time difference, however, is invariant with frequency and depends only on the difference in length of the paths from the source to the two ears. This lack of dependence on frequency gives the inter-aural time difference an overriding importance in sound localization.

If the sound source emits a pure constant-amplitude sine wave the time difference manifests itself at low frequencies as a phase difference proportional to frequency. As frequency increases the phase difference becomes equal to π radians at about 700 Hz for maximally left or right sources, when the path difference becomes half a wavelength. Above this, the phase shift in excess of π radians would lead to an ambiguous judgment were it not for the fact that at this frequency the head begins to be an obstacle in the sound field and introduces an amplitude difference that allows the ambiguity to be resolved [★] 2. T T Sandel, D C Teas, W E Feddersen & L A Jeffress, Localization of sound from single and paired sources. JASA vol. 27 (1955) p. 842. up to something like 1.2 kHz. Above this point the only meaningful cue resides in this amplitude difference, which is by no means so precise an indicator of direction as the time difference, being subject to perturbations due to local obstacles or wax in the ears or, in closed spaces, reflections from the boundaries. It is considered to be of secondary importance; the ear relies on it when it has to.
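As a rough check on these figures, the relation between time difference and phase can be put into a few lines of Python. This is only a sketch: the speed of sound (343 m/s) and the function names are assumptions introduced here, and the maximum time difference is simply back-calculated from the 700 Hz figure quoted in the text.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s, assumed room-temperature value


def interaural_phase(freq_hz, itd_s):
    """Phase difference in radians of a pure tone for a given time difference."""
    return 2.0 * math.pi * freq_hz * itd_s


def ambiguity_frequency(itd_s):
    """Frequency at which the phase difference reaches pi radians."""
    return 1.0 / (2.0 * itd_s)


# Time difference implied by a phase difference of pi at 700 Hz
max_itd = 1.0 / (2.0 * 700.0)
# Corresponding path difference (half a wavelength at 700 Hz)
path_difference = SPEED_OF_SOUND * max_itd

print(f"max time difference: {max_itd * 1e3:.2f} ms")
print(f"path difference:     {path_difference:.3f} m")
```

On these assumptions the 700 Hz figure implies a maximum inter-aural time difference of about 0.71 ms, i.e. an effective path difference of roughly 0.245 m.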

When the sound is complex as, for example, in the case of human speech it will contain many transients, i.e. identifiable singularities in the waveform; these restore the possibility of using at high frequencies the time difference cue that already provides a firm indication at low frequencies. Not only that, but the increased resolution possible at high frequencies permits increased accuracy of localization. Evidence from everyday life supports this argument; try, for example, to detect the position of a blackbird uttering its alarm call, consisting of long pulses of tone at about 3 kHz with long onset and decay times. The studied avoidance of sudden transients at the start and finish added to the choice of a frequency resulting in an ambiguous phase difference for large predators, leaves only the rather inaccurate amplitude cue which is subject to the confusing effects of obstacles and reflections. By contrast, its territorial and other song is rich in transients and presents little difficulty in locating the source.

It is difficult to overestimate the importance of transients in sound localization; a reliable mechanism clearly exists at low frequencies, but without transients it cannot be employed in that part of the frequency spectrum where the ear is at its most sensitive - from 1 to 6 kHz. With their aid the inter-aural time difference, the most important single parameter in sound localization, can be evaluated over the entire audible frequency range. The inter-aural time difference alone, however, will not determine direction; what it does is to define a surface on which the source must lie. This surface is, strictly, a hyperboloid of revolution about the axis through the listener's two ears, but negligible error results in practice from considering it as the asymptotic cone, and it is the apical angle of this cone that the time difference defines.
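The geometry of the asymptotic cone can be sketched numerically. The following assumes plane-wave incidence on two points a distance d apart with no head between them, so that the path difference is d·cos α, where α is the angle between the direction of arrival and the axis through the ears. The spacing of 0.2 m, the speed of sound and the function name are illustrative assumptions, not values taken from this part of the text.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s, assumed


def cone_half_angle_deg(itd_s, ear_spacing_m=0.20):
    """Half-angle, in degrees, of the cone about the inter-aural axis on which
    a distant source must lie to produce the given time difference.

    Plane-wave model with no head: path difference = spacing * cos(half-angle).
    """
    ratio = SPEED_OF_SOUND * itd_s / ear_spacing_m
    if abs(ratio) > 1.0:
        raise ValueError("time difference exceeds the maximum for this spacing")
    return math.degrees(math.acos(ratio))


for itd_us in (0, 100, 300, 500):
    half_angle = cone_half_angle_deg(itd_us * 1e-6)
    print(f"{itd_us:4d} us -> half-angle {half_angle:5.1f} deg")
```

A zero time difference gives a half-angle of 90°, the cone degenerating into the median plane; the apical angle referred to above is twice the half-angle returned here.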

At this stage what have so far been considered as secondary cues come into play. The amplitude difference reinforces the left/right impression given by the time difference, whilst at frequencies for which its dimensions constitute a half wavelength or more the characteristic shape of the pinna, with its narrow and highly individual polar characteristic, introduces spectral changes that every proud owner of a pair of ears will have learnt subconsciously to interpret as an indication of direction of arrival. In this connection a recent paper by Butler and Belendiuk [★] R A Butler & K Belendiuk, Spectral cues utilized in the location of sound in the median sagittal plane. JASA vol. 61 (1977) p. 1264. throws some light on the use of spectral variation for estimating elevation in the median plane. A characteristic irregularity in the frequency response around 6 kHz is identified that varies systematically as the source elevation changes.

These cues to localization are available to the observer without any conscious action on his part. If, additionally, he is free to move his head he can learn more about the position of the source. By observing the change in its apparent position as he turns his head from left to right he can immediately tell whether it is in front of or behind him. He can also use the first derivative - the rate of change of inter-aural time difference as his head turns - to estimate its elevation (Wallach [★] H Wallach, On sound localization. JASA vol. 10 (1939) p. 270., de Boer and van Urk [★] K de Boer & A T van Urk, Some particulars of directional hearing. Philips Tech. Rev. vol. 6 (1941) p. 359.).

By these means the listener has localized the source to a unique direction in space. It only remains to estimate its distance.

This parameter is the one that he has the least satisfactory means of determining. Loudness is a possible cue, but postulates prior knowledge of the strength of the source; in the case of something familiar like a human voice a rough guess at distance can result. It has been suggested that the particle velocity/pressure ratio that increases during the approach to a small source could be instrumental - the difficulty here is that the ear has no obvious means of perceiving velocity. It is true that it could be done by the two ears acting in concert sensing it as pressure gradient, but the means by which the gradient could be derived from the pressures at the ears has not been disclosed. Moreover the method would be insensitive, the rise in particle velocity amounting to only 3 dB for an approach to within one radian of the source, i.e. to a distance of λ/2π at the frequency concerned. At 50 Hz a radian corresponds to about 1 metre, and at higher frequencies it is proportionately less. At one wavelength distant the rise is only 0.1 dB and quite undetectable.
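The 3 dB and 0.1 dB figures follow from the standard expression for a spherical wave from a point source, in which the ratio of particle velocity to pressure exceeds its plane-wave value by a factor √(1 + 1/(kr)²), where kr = 2πr/λ. The sketch below evaluates it; the point-source idealization, the speed of sound and the function names are assumptions made for illustration.

```python
import math


def velocity_rise_db(kr):
    """Rise in dB of the particle velocity/pressure ratio over its plane-wave
    value, at a distance r from a point source, where kr = 2*pi*r/wavelength."""
    return 10.0 * math.log10(1.0 + 1.0 / kr ** 2)


def radian_distance_m(freq_hz, speed_of_sound=343.0):
    """Distance corresponding to one radian of phase (wavelength / 2*pi)."""
    return speed_of_sound / (2.0 * math.pi * freq_hz)


print(f"rise at one radian:     {velocity_rise_db(1.0):.2f} dB")
print(f"rise at one wavelength: {velocity_rise_db(2.0 * math.pi):.2f} dB")
print(f"one radian at 50 Hz:    {radian_distance_m(50.0):.2f} m")
```

Since the rise falls off so quickly with distance, the cue would be confined to low frequencies and very near sources, which is consistent with the insensitivity noted above.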

In a closed space such as a room, the ratio of direct to reverberant sound is a quantity that gives some idea of distance but it begs the question as to how the direct and reverberant components are differentiated. In view of the confusion caused by reflections the initial judgment of direction must rely almost entirely on transients of medium and high frequency since these are the only cues available in advance of the reflections. Perhaps the relative magnitude of transients to the total sound gives some idea of distance. Certainly these considerations give added emphasis to the importance of transients in localizing sound sources.

Having pinpointed the position of the source using the means outlined, the natural reaction - no doubt a relic of the primitive survival situation - is for the listener to turn and face it. This immediately brings it into the region of greatest perceptual acuity; all the asymmetries disappear and the accuracy of location is determined by the limiting angular discrimination in azimuth. Numerous investigators have experimented on this topic: typical results are those of Moir and Leslie [★] J Moir & J A Leslie, Stereophonic reproduction of speech and music. J. Brit.IRE 1951 Radio Convention., who found that less than 2° was detectable. This corresponds to an inter-aural time difference of a mere 22 μs or so.

Psychophysiology of Hearing

Nature of the Transducer.

The foregoing describes the physical situation of a listener exposed to a sound source. If he is to be provided by artificial means with stimuli that affect him in the same way, it is necessary to discover something of the psychoacoustic processing that constitutes his response, so that a realistic impression may be created. We have to know what cues can be and must be provided.

It is not appropriate here to enter on a detailed discussion of the ear complex: there is a very extensive literature on the subject should any reader wish to search more deeply than the present article allows. A good starting point is Fletcher [★] Harvey Fletcher, Speech and Hearing in Communication. Van Nostrand, 1953.

In summary: the visible portion of the auditory mechanism, the pinna, plays a not very significant role in hearing. It probably performs an energy collecting function and from its convoluted shape introduces characteristic colourations that its owner has learned to interpret as front/back and/or up/down information. Its small size restricts these functions to high frequencies, say 4 kHz and above.

Within the ear the sound impinges on a membrane - the eardrum - whose motion is transmitted by way of a linkage of small bones to a further membrane - the oval window - that in turn transmits the sound energy to a liquid-filled tapered canal known as the cochlea from its coiled configuration, similar to a snail shell. The small bones that form the linkage are so proportioned that they provide an impedance match between the eardrum and the cochlea.

Running centrally down the cochlea and dividing it into two parts lies the basilar membrane, narrow regions of which resonate in response to the different frequency components of the energy reaching the oval window, in a crude Fourier analysis. High frequency components cause resonance near the oval window at the basal end of the basilar membrane whilst progressively lower frequencies shift the activity down toward the apical end. There is thus a ready-made 'place' mechanism of frequency discrimination, though the mechanical constants of the system are not in accord with the known discriminatory capability of a listener. If place alone were involved a 'Q' of several hundred would be necessary instead of the value that actually obtains - about three. This low value is necessary to ensure a rapid response. One must therefore assume that critical frequency resolution results from neural processing higher in the chain.
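The trade-off between selectivity and speed of response can be illustrated with a simple second-order resonator, for which the bandwidth is f0/Q and the envelope of the free response decays with time constant Q/(π·f0). Treating a region of the basilar membrane in this way is a simplification assumed here, as are the 1 kHz centre frequency and the value of 300 standing in for 'several hundred'.

```python
import math


def bandwidth_hz(q, centre_hz):
    """-3 dB bandwidth of a second-order resonator."""
    return centre_hz / q


def envelope_time_constant_ms(q, centre_hz):
    """Time constant, in ms, with which the resonator's envelope builds or decays."""
    return 1000.0 * q / (math.pi * centre_hz)


CENTRE_HZ = 1000.0  # assumed centre frequency for illustration

for q in (3.0, 300.0):
    print(
        f"Q = {q:5.0f}: bandwidth {bandwidth_hz(q, CENTRE_HZ):6.1f} Hz, "
        f"time constant {envelope_time_constant_ms(q, CENTRE_HZ):6.2f} ms"
    )
```

On these assumptions a Q of 3 settles in about a millisecond, whereas a Q of 300 would take nearly a tenth of a second, far too slow to preserve the timing of transients.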

Along the length of the basilar membrane and cooperating with it to produce the initial response to a sound stimulus is the organ of Corti, comprising the hair cells that are thought to play an important part in originating this response.

Neural Response

The processing that takes place in the brain is electrochemical in nature and is thus not suitable to operate on an electrical signal corresponding to the sound pressure waveform. That being so, there is no reason why an electrical analogue of the waveform should appear during stimulation. Such an analogue, however, can be detected by electrodes suitably placed on the head and neck. This response is called the 'cochlear microphonic', but it is not believed to play any part in subsequent processing. Possibly it is instrumental in initiating those neural signals that the brain does process, and which carry in coded form all the information subsequently extracted and recognized.

The eighth nerve, concerned with the sense of hearing, comprises at the peripheral (cochlear) level, a bundle of between 20,000 and 30,000 fibres, evenly distributed along the length of the basilar membrane, where they originate in the region of the hair cells. When the excitation of the basilar membrane adjacent to a nerve fibre ending reaches a certain threshold an 'action potential' is generated, supposedly through the intermediary of the hair cells. This action potential, which propagates electrochemically along the fibre, bears little relationship to the stimulus waveform, consisting of a short pulse of the order of 1 ms long, of standardized amplitude, repeated at intervals if the stimulus is maintained, at a rate depending on the stimulus intensity, but not exceeding 300 to 400 pulses per second in any single fibre. As the stimulus intensity is raised beyond the threshold, the fibre responds to an increasingly wide range of frequency, so that further processing must take place if the known fine limits of pitch perception are to be achieved. The behaviour of neural fibres was studied, notably by Galambos and Davis [★] 8 - R Galambos & H Davis, Response of single auditory nerve fibres to acoustic stimulation. J Neurophysiology vol. 6 (1943) p. 39., who worked with cats: their results are therefore conditioned by the differences between cat and human physiology. The neural mechanisms, however, operate on the same principles.

The question inevitably poses itself - how can the brain, however cunningly organized, extract the detailed information that it does from signals that apparently bear so little relation to the incoming stimulus? We are here concerned mainly with the localization problem, so that it is proposed to discuss only those cues relevant to that purpose. For more general reading, an excellent review was written by Whitfield [★] 9- I C Whitfield, Coding in the auditory nervous system. Nature 25th Feb. 1957, p. 756 et seq. in 1957, most of which appears still valid today.

To return to the action potential; let us examine some of its known characteristics in the light of the localization problem. It seems that although the rate of firing depends on stimulus intensity, successive firings of the same fibre are always separated by an integral multiple of the stimulus period. This strongly suggests synchronism, and in fact the firings relate to the zero-crossing times of the stimulus (in one direction only). As is usual in nature, things are not perfectly tidy, and there is a delay, known as 'latency', between the onset of a stimulus and the generation of a spike action potential. The latency is least for strong stimuli and for high rates of zero-crossing, which suggests that a threshold has to be overcome; however, the disturbance to synchronism is not great.

Additionally, it has been remarked already that transmission of the action potential impulses along the nerve fibres is not purely electrical, consequently we are not considering velocities of the same order as that of light. The process is more complex, involving ion exchange between the inside and outside of the fibre, and the speed is dependent on a number of factors, including the fibre diameter and the intensity of the original stimulus. It ranges from several hundred down to one or two metres per second in the fine fibres of the auditory cortex. This slow propagation is significant since it makes available the time delay parameter as one of the processing tools with which the brain can operate on the neural signals from the two ears. Significantly, innervation from both ears takes place quite early in the neural pathways, at the superior olivary complex. It is difficult to imagine any other purpose of such an arrangement than a close comparison of the two sets of neural signals.

Regrettably, at this point the trail peters out for the moment, and the processing that eventually results in localization itself is still a matter of conjecture. Models have been proposed by several workers, notably Jeffress [★] 10 - L A Jeffress, A place theory of sound localization. J Comp. Physiol. Psychol. vol. 41 (1948) p. 35. and van Bergeijk [★] 11 - W A van Bergeijk, Variation on a theme of Bekesy. A model of binaural interaction. JASA vol. 34 (1962) p. 1431. and although these are interesting as far as they go, they are incomplete. They do, however, rely on the inter-aural time difference cue which is coded into the total neural response by the near synchronism of the spikes of action potential with the vibrations of the sound stimulus as they appear at the basilar membrane. It seems that for all intensity levels well above threshold there will be at least one action potential pulse generated for every individual cycle of the incoming waveform by virtue of the large number of fibres involved. This holds good up to the limiting frequency at which one period of the stimulus is comparable with the duration of the spike: say 1 kHz or so.

Nature has taken too much care over the preservation of the interaural time difference in the transcoding of the linear input signal into the non-linear neural response for this to be the result of a happy accident. It must surely indicate the importance of such a cue in the task of localization.

The Stereophonic Image

In the present context stereophony excludes systems that merely set out to make a pleasing effect, such as the quadraphonic class - some spatial correspondence between the sound sources in the recording studio and the images created in the reproducing room is implied. We have to consider how, and to what extent, the natural cues in directional listening can be provided artificially by stereophonic reproducing systems.

Discrete Stereophony

As is well known, a minimum of two channels is needed to form any sort of sound image. The simplest arrangement envisages a pair of earphones driven through identical transmission channels from a pair of microphones mounted to simulate the ears on a dummy head. In this case the listener can be presented directly with the inter-aural time difference cues appropriate to the positions of the various sound sources around the dummy head. The arrangement does provide a good spatial impression, to which there are two main drawbacks.

First, because the headphones move with the head, those cues associated with head movement in a free field are absent, and although the time difference cue gives good directional information, listeners usually describe the sources as being 'in the head' - which is, to say the least, unnatural. Second, the wearing of headphones is in itself unaesthetic: it is far preferable to receive the sounds through one's own two ears from freely propagating acoustic waves.

Free-field Stereophony

When we consider reproduction from a pair of symmetrically spaced loudspeakers we have to take account of the fact that each loudspeaker communicates with both of a listener's ears. Thus, to produce an inter-aural phase difference it is not appropriate to drive the loudspeakers with signals differing in phase, as in the case of headphones. As Blumlein propounded in 1931 [★] 12 - A D Blumlein, BP 394325., to produce phase differences at the ears, the loudspeakers must be driven by signals in-phase but of different magnitude. A recapitulation of his analysis can be found in a paper by Clark, Dutton and Vanderlyn [★] 13 - H A M Clark, G F Dutton & D B Vanderlyn, The 'Stereosonic' recording and reproducing system. Proc. IEE vol. 104 part B (1957) p. 417. of 1957.

As a phase difference proportional to frequency is equivalent to a constant delay, a realistic interaural time difference cue can be simulated. The analysis postulates that the wavelength is long compared with the ear spacing and thus that the attenuation at the further ear due to the shadowing effect of the head itself is negligible. This condition is met at low frequencies but starts to break down at about 700 Hz, when the inter-aural path becomes half a wavelength: above this frequency it is necessary to seek some other mechanism of localization.
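The way in which an amplitude difference at the loudspeakers becomes a time difference at the ears can be sketched with phasors. The model below is a simplified reading of the low-frequency analysis, not a reproduction of it: each ear is assumed to receive its nearer loudspeaker directly and the farther one after a fixed cross delay, with no head shadowing. The cross delay of 0.3 ms, the amplitudes and the function name are illustrative assumptions.

```python
import cmath
import math


def equivalent_itd_s(left_amp, right_amp, freq_hz, cross_delay_s):
    """Inter-aural time difference produced by two in-phase loudspeaker signals.

    Each ear hears its nearer loudspeaker directly and the farther one
    cross_delay_s later; head shadowing is ignored (low-frequency assumption).
    A positive result means the left ear leads, i.e. an image to the left.
    """
    omega = 2.0 * math.pi * freq_hz
    late = cmath.exp(-1j * omega * cross_delay_s)
    left_ear = left_amp + right_amp * late
    right_ear = right_amp + left_amp * late
    phase_difference = cmath.phase(left_ear * right_ear.conjugate())
    return phase_difference / omega


CROSS_DELAY = 0.3e-3  # s, assumed extra travel time to the farther ear
LEFT, RIGHT = 1.0, 0.5  # assumed loudspeaker amplitudes
x = (LEFT - RIGHT) / (LEFT + RIGHT)

for freq in (100.0, 300.0):
    itd = equivalent_itd_s(LEFT, RIGHT, freq, CROSS_DELAY)
    print(f"{freq:5.0f} Hz: {itd * 1e3:.4f} ms (x * cross delay = {x * CROSS_DELAY * 1e3:.4f} ms)")
```

For these values the equivalent delay stays within a few per cent of (L − R)/(L + R) times the cross delay at both frequencies, which is the constant-delay behaviour described above; the approximation worsens as the frequency rises towards the point where the cross delay approaches half a period.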

High Frequency Cues

Many workers in the field have, from the earliest days, looked to the inter-aural amplitude difference, which is most pronounced at high frequencies, to explain the undoubted directional capability of the ear in this region. Whilst a good impression of leftness or rightness in the horizontal plane can be produced by a simple amplitude difference there are two main difficulties in ascribing to it the entire mechanism of high frequency localization. First, stereo reproducing systems work much too well in the presence of room reflections; second, they are much too sensitive to small displacements of the listener from the central position between the two loudspeakers. These considerations strongly suggest that transients play as important a role in stereo reproduction as they do in natural listening. Experiments carried out by Percival [★] 14 - W S Percival, Stereophonic reproduction with the aid of a control signal. EMI Research Laboratories Report RN/108 (1957). in 1957 confirm this, and suggest the following hypothesis.

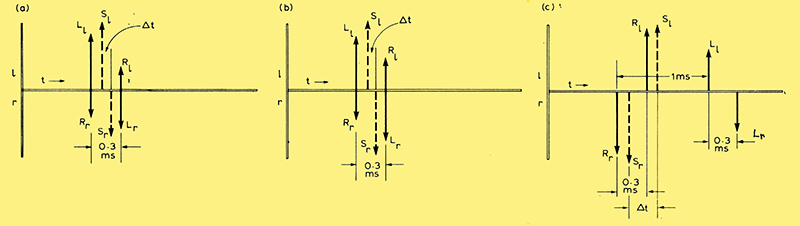

Fig. 1 - Timing diagram, shows generation of a virtual time difference Δt from components fixed in time but of variable relative amplitude.

Localization using transients depends on the integrating capability of the ear. The situation is illustrated in Fig. 1(a). The left and right ear responses are juxtaposed to show the relative timings. At an arbitrary time the left ear, for example, receives an impulsive signal Ll from the left loudspeaker and simultaneously the right ear receives a signal Rr from the right loudspeaker. This follows from the symmetry of the listening geometry. An image to the left of the sound stage is assumed; hence Ll is shown greater than Rr. After an interval equal to the extra time taken by these sounds to travel to the contralateral ears, which, for a ±30° loudspeaker spacing will be approximately 0.3 ms, the left ear will receive Rl and the right ear Lr. This later pair of signals will be reduced in amplitude as a result of their diffraction round the head. It is now suggested that the integrating capability of the ear - that is, its failure to resolve two impulses monotically presented at an interval of less than 2 to 3 ms - will cause each ear to hear a single signal at a virtual time dependent on its component parts. The resultant summed signals Sl and Sr, shown in broken line in the diagram, will have virtual timings biased toward their larger components. The significant parameter is the equivalent inter-aural time difference Δt between Sl and Sr, which will determine the apparent direction when the ear-brain complex fuses them into a single stereophonic image at a direction determined by the loudspeaker amplitudes L and R.

The theory clearly holds for the central case when all is symmetrical, and for the limiting cases when one loudspeaker is silent and the delay corresponds to the direction of the energized one. However, the signals shown in the diagram are over-simplistic, since they represent impossibly short impulses that would be severely mutilated in passing through filters and transducers, not to mention the peripheral mechanisms of the ear itself. The figure is really no more than a timing diagram, showing the fixed time relationship between the variable amplitude component sounds at the two ears. What it implies is that at some time after the onset of the partial stimuli indicated by the thick arrows of Fig. 1, the integral of the energy received by one ear will reach a threshold value before the other, depending on the relative amplitudes of these partial stimuli, and an action potential will be generated at that ear earlier than at the other, as though it had resulted from a single virtual stimulus with a timing indicated by the thick broken arrow.
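One simple way of putting numbers to the idea of a virtual timing 'biased toward the larger component' is to take the amplitude-weighted mean of the two arrival times at each ear. This weighting rule is an assumption adopted here purely for illustration; the text itself speaks of an energy integral reaching a threshold and does not commit to a formula. Head shadowing is ignored, and the 0.3 ms gap is the figure quoted for a ±30° loudspeaker spacing.

```python
def virtual_time_ms(first_amp, second_amp, gap_ms):
    """Amplitude-weighted mean arrival time of two impulses, the first at
    time zero and the second gap_ms later (assumed weighting rule)."""
    return gap_ms * second_amp / (first_amp + second_amp)


def equivalent_itd_ms(left_amp, right_amp, gap_ms=0.3):
    """Difference in virtual timing between the two ears.

    Left ear hears the left loudspeaker first, then the right one;
    right ear hears the right loudspeaker first, then the left one.
    A positive result means the left ear leads, i.e. an image to the left.
    """
    t_left_ear = virtual_time_ms(left_amp, right_amp, gap_ms)
    t_right_ear = virtual_time_ms(right_amp, left_amp, gap_ms)
    return t_right_ear - t_left_ear


for left, right in ((1.0, 1.0), (1.0, 0.5), (1.0, 0.0)):
    print(f"L = {left}, R = {right}: delta t = {equivalent_itd_ms(left, right):.3f} ms")
```

Under this assumed rule the equal-amplitude case gives zero time difference and the case of a silent right loudspeaker gives the full 0.3 ms, matching the central and limiting cases mentioned above; in between, the result works out as 0.3 ms multiplied by (L − R)/(L + R).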

In Fig. 1(c) the position of the virtual stimulus may seem strange, but remember that if the second pair of partial stimuli are significantly delayed, the response tends to separate into two parts associated with the two components, and the second partial stimulus will have little effect on the first. This could indicate a time-weighting in the integration, the running integral having an effective decay time of a few milliseconds: clearly the integral cannot be allowed to build up indefinitely.

Experimental Evidence

Some light is thrown on this theory by an experiment in which an attempt is made to recentralize the perceived image by delaying the signals to one or the other ear. Two symmetrically placed loudspeakers, driven in-phase at different amplitudes, produce signals at a pair of non-directional pressure microphones spaced 20 cm apart, representing the relative ear positions of a hypothetical listener. To eliminate any effects due to head shadowing, no dummy head is used: the only variable is the relative amplitude of the fixed time components of Fig. 1(b) as determined by the loudspeaker signals. The amplified microphone outputs are fed to delay networks arranged to produce a differential delay in either sense up to a maximum of 0.35 ms, and thence to the left and right receivers of a pair of stereo headphones. Assuming that integration takes place independently in each ear it should be unaffected by the interposition of the delays in the microphone channels, and it should be possible to shift the signals of Fig. 1(b) relative to each other by an amount -Δt until the summed signals Sl and Sr synchronize to produce a central image.

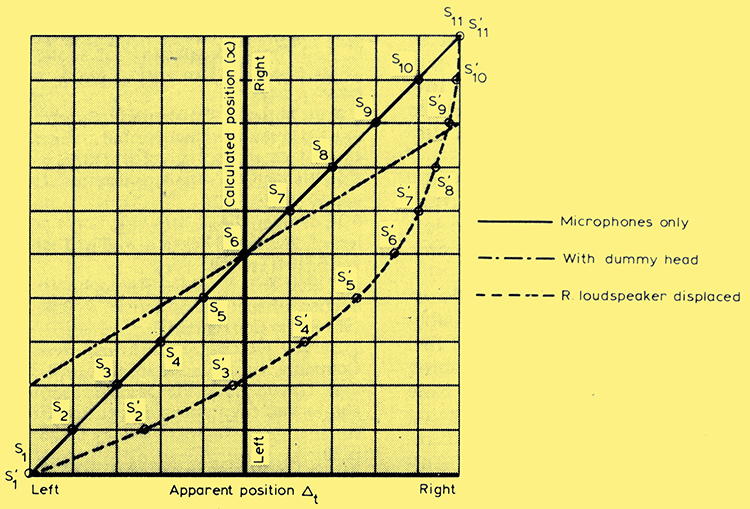

Fig. 2 - Central listening results in linear stage geometry as virtual sources S1 to S11, from equal increments of x, require equal increments of Δt to restore centrality. Asymmetric listening (broken curve) results in stage geometry distorted as the same virtual images are drawn towards the nearer loudspeaker. Dummy head (dot-dash curve) enhances the cue without affecting linearity.

Denote (L − R)/(L + R) by x, and call this normalized amplitude difference the calculated position of the source. Against this is plotted the normalized angle of incidence of a sound wave at the microphone position which would give rise to the inter-aural time difference Δt determined experimentally. The full-line curve of Fig. 2 shows there is good correspondence between the theoretical and experimental values, which represent the average of six observers' results.
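The two quantities being compared can be sketched as follows. The normalized amplitude difference is x = (L − R)/(L + R); the angle of incidence is obtained from a compensating delay on the assumption of a plane wave arriving at two microphones 20 cm apart, so that sin θ = c·Δt/d. The speed of sound and the function names are assumptions, and since the normalization applied to the angle in Fig. 2 is not stated, the sketch stops at degrees.

```python
import math


def normalized_amplitude_difference(left_amp, right_amp):
    """Calculated position x = (L - R) / (L + R) of the virtual source."""
    return (left_amp - right_amp) / (left_amp + right_amp)


def equivalent_angle_deg(delay_s, spacing_m=0.20, speed_of_sound=343.0):
    """Angle of incidence (from straight ahead) of a plane wave that would
    produce the given time difference across two spaced microphones."""
    return math.degrees(math.asin(speed_of_sound * delay_s / spacing_m))


print(f"x for L = 1.0, R = 0.5:     {normalized_amplitude_difference(1.0, 0.5):.3f}")
print(f"angle for 0.35 ms of delay: {equivalent_angle_deg(0.35e-3):.1f} deg")
```

On these assumptions the 0.35 ms maximum of the delay networks corresponds to an angle of incidence of about 37°, comfortably covering a loudspeaker pair at ±30°.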

These results were not obtained without difficulty. It was pointed out previously that the signals of Fig. 1 are fictitious, and it was proposed initially to ensure that vestigial low frequency cues were absent by using a high frequency tone pulse with rapid onset and slower decay time. Difficulties were encountered with interference fringes due to the tone which, with the unavoidable small asymmetries of the experimental set-up, produced spurious amplitude differences at the microphones. To overcome this, random noise, band-limited by a 2 kHz high-pass filter was substituted for the tone and modulated with the same pulse waveform. This improved matters considerably but the final image produced in the headphones was still not so well defined as the actual image at the microphone position in the anechoic room. The results, nevertheless, are sufficiently clear cut to show that an effective inter-aural time difference cue is produced when the loudspeakers are driven with high frequency transient signals, in-phase but of different amplitude, without benefit of any head shadowing effects.

The Effect of the Head

Because diffraction round the head undoubtedly plays a significant part in live listening the experiment was made more realistic by introducing a dummy head between the microphones. This was made of plaster, but wrapped in polyurethane foam so as to simulate human flesh a little more closely. The result of this was to enhance the angular sensitivity of the arrangement, requiring more compensating delay for a given amplitude difference at the loudspeakers, as shown by the dot-dash curve of Fig. 2. This additional delay must represent a trade-off of time differences against the intensity differences introduced by head shadowing.

The enhanced angular sensitivity of the stereo listening arrangement at high frequencies was noted in ref. 13 as an empirical finding. In those early experiments the angular displacement of the image at low frequencies as the speaker amplitudes were varied agreed closely with that found in the present case in the absence of the dummy head, although two quite different mechanisms are involved. At high frequencies the excess sensitivity is now shown to be due to the presence of the head with its attendant diffraction pattern. The magnitude of the increase is of the right order to account for the difference.

Before concluding the tests, one further experiment of significance was performed to demonstrate the effect of asymmetrical listening. Reverting to the set-up without the dummy head, the right loudspeaker only was brought one foot nearer the microphones and the first experiment repeated. This is approximately the same as displacing the listener the same amount to the right of centre, but more convenient experimentally.

Fig. 1(c) shows how the timings are affected. The sound from the right loudspeaker clears both ears before that from the left reaches either, about 1 ms later. By this time a fully right-handed cue is in course of establishment which the later sound from the left loudspeaker has difficulty in modifying. One can thus expect a strong bias to the right and this is indeed what happens, as is evident from Fig. 2. The broken curve shows that values of x corresponding to apparent sources S1 to S11 for the central listening position, five to the left and five to the right of stage centre, now yield a distorted image with only two (S1, S2) to the left and eight (S4 to S11) on the right, crowding up toward the loudspeaker position.
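The figure of about 1 ms can be checked with simple arithmetic. The sketch below assumes a speed of sound of 343 m/s and a hypothetical microphone spacing of 0.18 m (the spacing used in the experiment is not stated here); it compares the lead gained by bringing one loudspeaker a foot nearer with the longest time a wavefront could take to pass from one microphone to the other.

```python
SPEED_OF_SOUND = 343.0  # m/s, assumed value
FOOT = 0.3048           # metres


def lead_time(path_difference_m):
    """Time by which the nearer loudspeaker's sound arrives first (seconds)."""
    return path_difference_m / SPEED_OF_SOUND


def max_traverse_time(mic_spacing_m):
    """Longest possible delay between the two microphones, for sound
    arriving along the line joining them (seconds)."""
    return mic_spacing_m / SPEED_OF_SOUND


lead = lead_time(FOOT)               # roughly 0.89 ms
traverse = max_traverse_time(0.18)   # hypothetical spacing, roughly 0.52 ms

# The right-hand sound has passed both microphones before the left-hand
# sound reaches either whenever the lead exceeds the traverse time.
clears_both = lead > traverse
print(f"lead {lead * 1e3:.2f} ms, traverse {traverse * 1e3:.2f} ms, clears both: {clears_both}")
```

Under these assumptions the lead comfortably exceeds the traverse time, which is consistent with the timing described for Fig. 1(c).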

Moreover, this effect is not one which scales up or down with the size of the reproducing set-up. The result of a foot of misalignment is the same whether we are operating in a domestic living room or the Albert Hall. It is one of the principal hazards in the way of mounting a large scale demonstration of stereophony, as anyone who has made the attempt will be only too aware. The implications for quadraphonic systems are obvious, but their proponents seem never to have faced up to them. All two-channel systems have a locus on which it is possible for a listener to position himself equidistant from the loudspeakers. For three channels there will always be one point that meets this criterion, but four channels do not provide even this vestigial possibility unless great care is taken in siting the loudspeakers - errors must be small compared with a foot. Nevertheless this factor seems to be universally ignored: much effort is expended on analysing the energy distribution around a point-sized listener, tacitly assumed to be exactly equidistant from every reproducer. Yet a little matter of a foot of error in the placing of one of them can distort the image geometry out of all recognition. Consideration of the directional distribution of energy may perhaps be appropriate under steady-state conditions. Speech and music, however, together with most other everyday sounds conveying useful information to a listener do not fall in this category. In contrast they may be more realistically considered as a stream of connected transients.
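The geometrical points made above, that a foot of misalignment gives the same time error at any scale and that the equidistant listening position shrinks from a line to a single point as channels are added, can be illustrated with a short calculation. This is a sketch under stated assumptions: point loudspeakers and a point listener in free field, a speed of sound of 343 m/s, and hypothetical layouts and helper names chosen purely for illustration.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s, assumed value
FOOT = 0.3048           # metres


def arrival_spread(listener, speakers):
    """Seconds between the earliest and latest arrivals at the listener
    when all loudspeakers emit the same transient simultaneously."""
    times = [math.dist(listener, s) / SPEED_OF_SOUND for s in speakers]
    return max(times) - min(times)


def polar(radius_m, bearing_deg):
    """Loudspeaker position at a given distance and bearing from the origin,
    bearing measured from straight ahead (hypothetical helper)."""
    a = math.radians(bearing_deg)
    return (radius_m * math.sin(a), radius_m * math.cos(a))


def square_quad(half_side_m):
    """Four loudspeakers at the corners of a square centred on the origin."""
    h = half_side_m
    return [(-h, h), (h, h), (-h, -h), (h, -h)]


# One loudspeaker a foot too near: living-room and concert-hall scale.
small = arrival_spread((0.0, 0.0), [polar(3.0, -30), polar(3.0 - FOOT, 30)])
large = arrival_spread((0.0, 0.0), [polar(30.0, -30), polar(30.0 - FOOT, 30)])

# Two channels: anywhere on the centre line is equidistant.
pair = [(-1.5, 2.5), (1.5, 2.5)]
on_line = arrival_spread((0.0, -1.0), pair)

# Four channels: only the centre is equidistant; a foot forward is not.
quad = square_quad(2.0)
centre = arrival_spread((0.0, 0.0), quad)
off_centre = arrival_spread((0.0, FOOT), quad)
```

In this sketch `small` and `large` come out equal, at a little under 1 ms, whatever the size of the set-up, while `off_centre` for the square layout is more than 1 ms even though the listener has moved only a foot.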

It is more than probable that, given additional channels, better stereophony can be achieved than with the basic two which economic stringency has hitherto allowed us. However, before we adopt any alternative multichannel system as an industry standard we ought to be clearer than we are now about its psychoacoustic basis, its aims and objects and the means by which these are to be put into effect.

Conclusion

The practice of stereophony comprises two facets: the understanding of how a listener uses his ear/brain complex to locate the sources of sounds under natural conditions, and the engineering of means to reproduce enough spatial cues from a multiplicity of loudspeakers to recreate an effective image at another place or time.

We are still a long way from writing a full description of the total mechanism of localization. What is clear is that nature employs all the auditory clues available at all times, but they vary in effectiveness according to circumstances. The present article is intended to highlight the important role played by transients in nature and in stereophony - in the last instance it might well form an addendum to reference 13.

The experiments described are not proof that an actual inter-aural time difference is generated by the method described. Because the cue derived in Fig. 1(b) can be compensated by the introduction of a contrary time differential it is not rigorous to assume it consisted of a time difference in the first place: many workers (e.g. ref. 15) have shown that time differences can be traded directly with, for example, intensity differences. But the fact that the compensating delay in the experiment trades off linearly against the differential cue provided, arriving at the precise value of inter-microphone delay in the fully left or right condition when only one loudspeaker is operating, suggests strongly that an effective inter-aural time difference is indeed created.

Obviously, the additional intensity difference cue resulting from the insertion of the dummy head has to be traded off against an additional time delay (see the dot-dash curve of Fig. 2).

The experiment on asymmetric listening underlines an everyday experience in stereophonic listening. In this case it should be noted that only transients are involved; the low frequency cues to direction are not so fragile in the presence of small displacements. This very fragility, however, merely serves to underline the powerful influence exerted by transients, particularly in the reflective surroundings in which most stereophonic reproduction takes place.

The experiments are neither exhaustive nor conclusive and could probably be extended and elaborated, but I feel they go some way toward establishing the prime role of the interaural time difference cue in stereophonic listening and unravelling some of the mechanisms through which it works. Any stereophonic system designed to operate without regard to this cue does so at its peril.

Acknowledgments

My thanks are due to many colleagues, in particular Dr W S Percival, for numerous day-to-day discussions, mostly of some fifteen years ago, that have been responsible, along with the personal experience of living with stereo since its inception, for distilling the material of this article. Acknowledgements are also due to the Directors of EMI Limited for permission to publish it.

Philip Vanderlyn joined EMI in 1935, working under A D Blumlein and H A M Clark, from whom he learned the facts of stereophonic life. Experience at EMI up to retirement in 1979 included work on stereophony, sound locators, radar, data transmission and the application of digital techniques to sound recording. Born in London in 1913, he was educated at Christ's Hospital and Northampton Polytechnic.

References

- E C Cherry & B McA Sayers, Mechanism of binaural fusion in the hearing of speech. JASA vol. 29 (1957) p. 973.

- T T Sandel, D C Teas, W E Feddersen & L A Jeffress, Localization of sound from single and paired sources. JASA vol. 27 (1955) p. 842.

- R A Butler & K Belendiuk, Spectral cues utilized in the location of sound in the median sagittal plane. JASA vol. 61 (1977) p. 1264.

- H Wallach, On sound localization. JASA vol. 10 (1939) p. 270.

- K de Boer & A T van Urk, Some particulars of directional hearing. Philips Tech. Rev. vol. 6 (1941) p. 359.

- J Moir & J A Leslie, Stereophonic reproduction of speech and music. J. Brit.IRE 1951 Radio Convention.

- Harvey Fletcher, Speech and Hearing in Communication. Van Nostrand, 1953.

- R Galambos & H Davis, Response of single auditory nerve fibres to acoustic stimulation. J Neurophysiology vol. 6 (1943) p. 39.

- I C Whitfield, Coding in the auditory nervous system. Nature 25th Feb. 1957, p. 756 et seq.

- L A Jeffress, A place theory of sound localization. J Comp. Physiol. Psychol. vol. 41 (1948) p. 35.

- W A van Bergeijk, Variation on a theme of Bekesy. A model of binaural interaction. JASA vol. 34 (1962) p. 1431.

- A D Blumlein, BP 394325.

- H A M Clark, G F Dutton & P B Vanderlyn, The 'Stereosonic' recording and reproducing system. Proc. IEE vol. 104 part B (1957) p. 417.

- W S Percival, Stereophonic reproduction with the aid of a control signal. EMI Research Laboratories Report RN/108 (1957).

- L L Young & R Carhart, Time-intensity trading functions for pure tones and a high frequency AM signal. JASA vol. 56 (1974) p. 605.

|